Prompt engineering is the process of designing the prompts, or input instructions, that guide the output of a language model. It involves carefully constructing the text given to the model in order to obtain the desired result. This matters because the model’s output depends heavily on the prompt it receives.

Prompt Anatomy

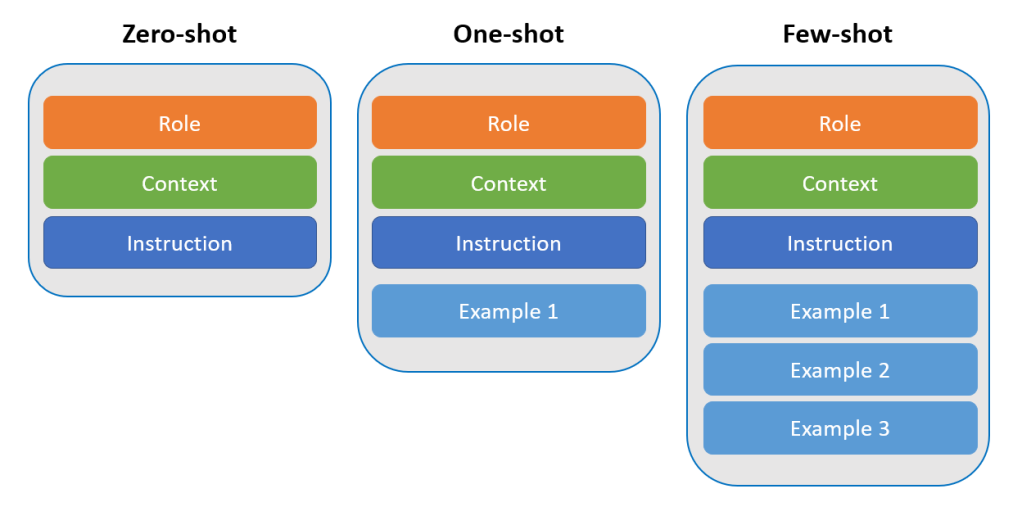

The prompt can be divided into several logical components:

- The role: the persona assigned to the AI. For instance: you are a lawyer, a pharmaceutical salesperson, etc.

- The context: we can provide information that will help the AI in writing its response.

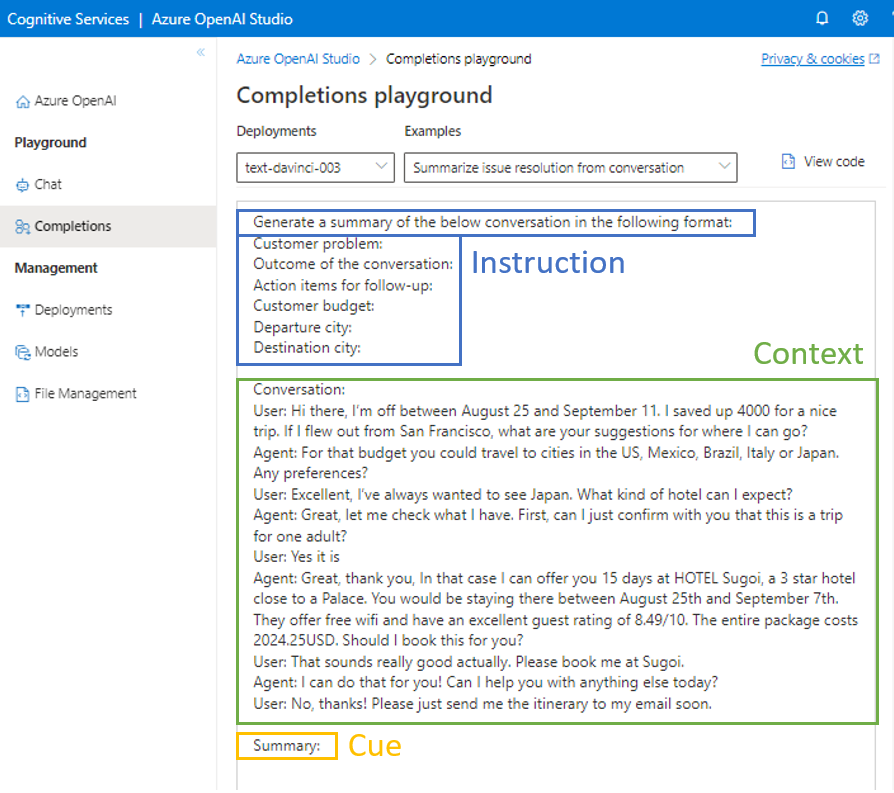

- The instruction: this component answers the question “What should the model do?”

- The example(s): examples can clarify what is expected of the model (response structure, reasoning steps, tone, behavior…). They condition the model’s response at inference time rather than teach it anything: the model’s weights do not change.

- The cue: the first word(s) of the expected output, placed at the end of the prompt to steer the completion. For example: import to start a piece of code, or select to start an SQL query.

The terms zero-shot, one-shot, and few-shot refer to the number of examples provided to the model to condition its response (0, 1, or several).
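As a minimal sketch of how these components could be assembled into a single Completion-style prompt, the helper below joins them in the order listed above and puts the cue last. The function names (`build_prompt`, `shot_label`), the "Example request / Example response" layout, and the sample SQL content are all assumptions made for illustration; they are not part of any OpenAI API.

```python
def build_prompt(role, context, instruction, examples=(), cue=""):
    """Join the prompt components into one string (hypothetical helper)."""
    parts = [role, context, instruction]
    # Each example is a (request, response) pair; the layout is an assumption.
    for request, response in examples:
        parts.append(f"Example request: {request}\nExample response: {response}")
    prompt = "\n\n".join(part for part in parts if part)
    if cue:
        # The cue goes last so the model continues from it.
        prompt += "\n\n" + cue
    return prompt


def shot_label(examples):
    """Name the prompting style from the number of examples provided."""
    count = len(examples)
    if count == 0:
        return "zero-shot"
    if count == 1:
        return "one-shot"
    return "few-shot"


examples = [("List the names of all customers.", "SELECT name FROM customers;")]
prompt = build_prompt(
    role="You are a SQL expert.",
    context="The database has one table: customers(id, name, country).",
    instruction="Write a query that counts the customers located in France.",
    examples=examples,
    cue="SELECT",
)
print(shot_label(examples))
print(prompt)
```

Passing an empty `examples` list turns the same call into a zero-shot prompt, which makes it easy to compare the two styles on the same task.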

Note that the structure of the prompt depends on which OpenAI (or Azure OpenAI) API you are using: Completion or Chat Completion. The Completion API takes a single text prompt, structured as presented above.

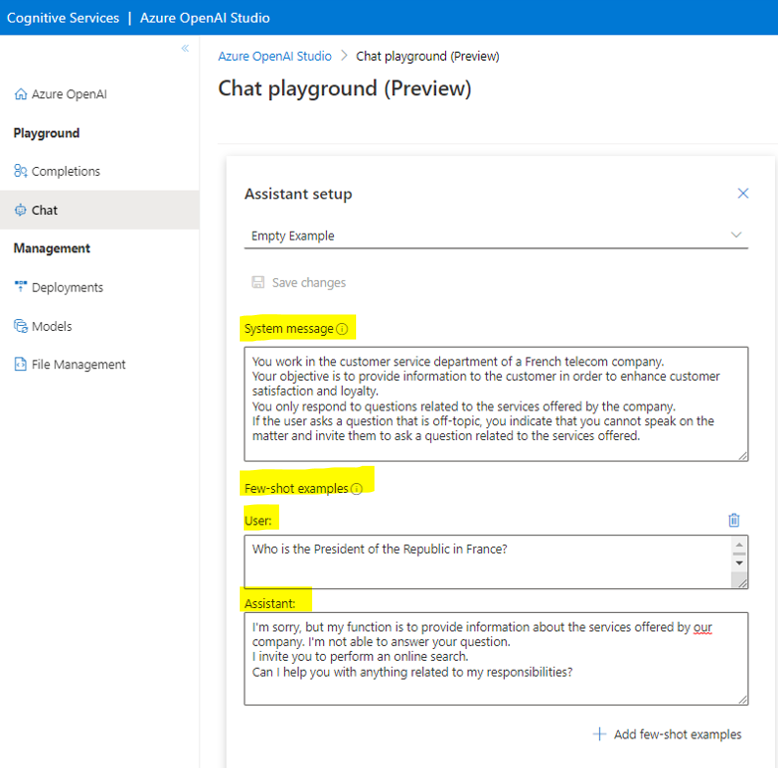

The Chat Completion API, on the other hand, structures its prompt differently, mainly in the way information is conveyed. The prompt is divided into two parts:

- System message: this holds the basic structure of the prompt seen previously (role, context, instruction…).

- Few-shot examples: sample user requests paired with the responses the model is expected to return.
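The two parts above map onto the list of messages that the Chat Completion API expects, where each message is a dictionary with a `role` (`system`, `user`, or `assistant`) and a `content`. The sketch below only builds that list; the helper name `build_chat_messages` and the sample texts are assumptions, and the actual API call is left out.

```python
def build_chat_messages(system_message, few_shot_examples, user_request):
    """Build a Chat Completion style message list (hypothetical helper)."""
    # The system message carries the role, context, and instruction.
    messages = [{"role": "system", "content": system_message}]
    # Each few-shot example becomes a user turn followed by an assistant turn.
    for example_request, example_response in few_shot_examples:
        messages.append({"role": "user", "content": example_request})
        messages.append({"role": "assistant", "content": example_response})
    # The real request comes last, so the model answers it next.
    messages.append({"role": "user", "content": user_request})
    return messages


messages = build_chat_messages(
    system_message="You are a lawyer. Answer in one short paragraph.",
    few_shot_examples=[
        ("What is a contract?", "A contract is a legally binding agreement."),
    ],
    user_request="What is a tort?",
)
for message in messages:
    print(f"{message['role']}: {message['content']}")
```

The resulting list is what would be passed as the `messages` argument of a Chat Completion request.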

Here are some tips for writing your prompt:

- Be as specific as possible, leaving little room for interpretation.

- Break the instruction down into smaller tasks, since LLMs tend to perform better on them. In the same spirit, asking the model to break down its reasoning step by step reduces imprecision in the response and makes that response easier to understand.

- Don’t be afraid to repeat yourself between the components of your prompt.

- The order of the components in the prompt matters. LLMs are subject to recency bias: information near the end of the prompt carries more weight than information near the beginning.

- Give the model an exit strategy: reduce hallucinations (incorrect responses presented as fact) by instructing the model that it can, or should, respond with “I don’t know”.
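Several of these tips can be combined in one prompt. The sketch below is one possible reading of them, not a prescribed recipe: it lists smaller steps, adds an exit strategy, and repeats the instruction at the end so that it benefits from recency bias. The helper name `apply_tips`, the wording of `EXIT_STRATEGY`, and the sample content are assumptions.

```python
# Assumed wording for the exit strategy; adapt it to your use case.
EXIT_STRATEGY = "If the context does not contain the answer, reply with: I don't know."


def apply_tips(instruction, context, steps=()):
    """Build a prompt that follows the tips above (hypothetical helper)."""
    parts = [instruction]
    if steps:
        # Break the task down into smaller, numbered steps.
        numbered = "\n".join(f"{i}. {step}" for i, step in enumerate(steps, start=1))
        parts.append("Proceed step by step:\n" + numbered)
    parts.append("Context:\n" + context)
    # Give the model a way out instead of forcing an answer.
    parts.append(EXIT_STRATEGY)
    # Repeat the instruction last, where it carries the most weight.
    parts.append("Reminder: " + instruction)
    return "\n\n".join(parts)


instruction = "Summarize the refund policy in two sentences."
prompt = apply_tips(
    instruction,
    context="Refunds are accepted within 30 days with a receipt.",
    steps=["Find the refund conditions.", "Write the summary."],
)
print(prompt)
```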

You can find a lot of useful information in Microsoft’s documentation on Azure OpenAI. Another great source of information is Learn Prompting.

Conclusion

By using prompt engineering techniques, practitioners can shape the behavior and output of the language model, making it more likely to generate accurate, relevant, and appropriate responses. Well-designed prompts let users control the style, tone, and specificity of the output, which helps mitigate biases and errors in the generated text.

Additionally, prompt engineering lets users adapt the model to specific tasks without retraining it, improving the quality of the generated responses.

Overall, prompt engineering plays a vital role in harnessing the full potential of GPT models and in improving both user experience and output quality.
