Recently, I have talked a lot about OpenAI and GPT through various integrations. But even though NLP is the most prominent field with generative AIs, it’s not the only one. Today, I’m going to talk about Computer Vision through OpenAI’s CLIP model and Microsoft’s Florence model and their integration into the new features offered in preview in Azure Vision.

CLIP

CLIP, developed by OpenAI, is a powerful neural network model that stands for “Contrastive Language-Image Pretraining“. Unlike traditional AI models that rely on supervised learning, CLIP is trained using a massive dataset containing image and text pairs (400M). It learned to associate images and their corresponding textual descriptions, enabling it to understand and generate meaningful representations of both images and text.

If you have read my previous post about vector similarity search, it’s a bit of the same principle. We capitalize on the properties of vectors to find meaning between texts and images. This innovative approach allows CLIP to perform a wide range of tasks, including image classification, image generation, and even zero-shot learning, where it can recognize objects without specific training on those objects.

Florence

Florence is a Microsoft multi-modal foundation model, trained with billions of text-image pairs and integrated in Azure Cognitive Service for Vision. The objective behind Florence was to have a model capable of adapting to different types of tasks. In their papers, they have defined 3 dimensions:

- Space : from coarse to fine (classification, detection, segmentation).

- Time : from static to dynamic (image to videos).

- Modality : from visual to multi-sense (depth, captions).

Florence was pre-trained using a large image-caption dataset. The training was to pick which images belong with which captions (same contrastive learning used in CLIP). Florence expands the representation to support object level, multiple modality, and videos respectively.

Before Florence, each service was trained disjointly, resulting in high cost and slow deployments; however, with its unified vision services, Florence reduces costs and accelerates deployments.

News on Azure Vision

The integration of Florence within Azure Cognitive Services has enabled the development of new features (in Preview).

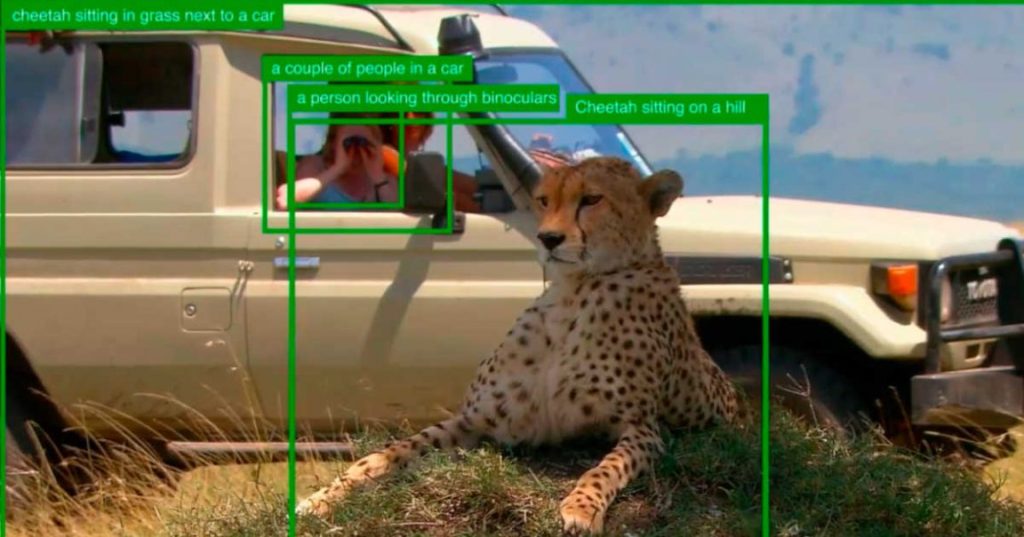

Dense captions : deliver rich captions, design suggestions, accessible alt-text, SEO optimization… The video below illustrates this feature. The different captions overlap to provide a detailed description of the scene.

Image retrieval : improve search recommendations and advertisements with natural language query : request for private preview. The diagram below explains the uses of vectorial similarity to retrieve relevant images. The video shows an example on Azure Studio interface.

Background removal : segment people and objects from their original background.

Model customization : lower costs and time to deliver custom models that match business use-cases.

Video summarization : search and interact with video content; locate relevant content without additional metadata : request for private preview.

The features presented are available in Azure Vision Studio. You can try them out for yourself.

Integration in M365 Apps

With the idea of infusing AI into its tools, Microsoft is integrating Vision Services into its M365 apps (Teams, PowerPoint, Outlook, Word, Designer, and OneDrive) and using them in the Microsoft Datacenter to enable innovative features, including segmentation capabilities in Teams for virtual meetings, image captioning for automatic alt-text in PowerPoint, Outlook, and Word to enhance accessibility, improved image tagging and search in Designer and OneDrive for better image management, and leveraging Vision Services in Microsoft Datacenters to enhance security and infrastructure reliability.

Leave a comment